LOCI: AI-Driven Observability & Performance Intelligence

Shift Observability Left

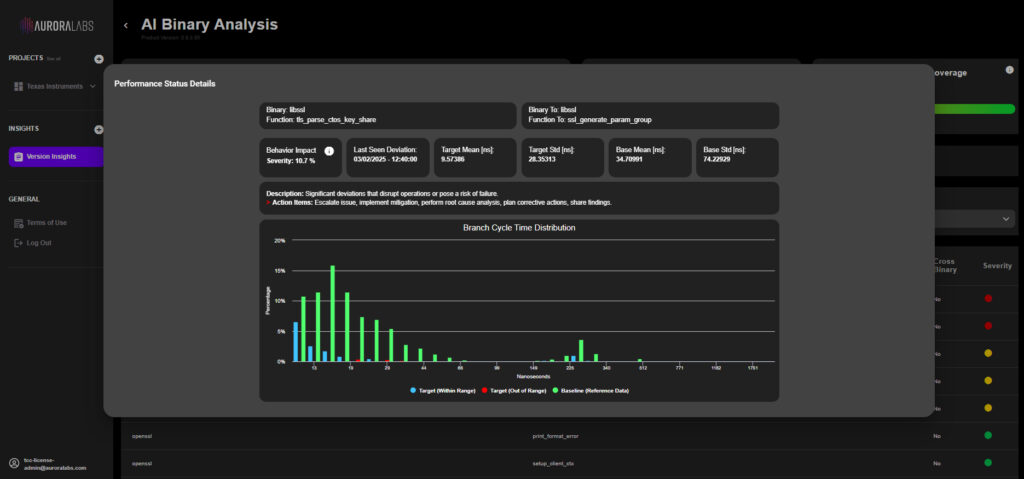

LOCI, the Line-of-Code Intelligence platform, transforms observability and the shift-left approach by extracting deep performance insights from compiled binary files, without requiring source code.

Traditional static analysis and observability tools fail to detect performance issues in compiled binary (BIN) files because they lack execution context, visibility into hardware interactions, and analysis of real-time software behavior.

LOCI bridges this gap by modeling compiled binaries with real-world execution data, enabling it to:

- Predict performance impacts

- Detect degradations across software versions

- Identify power-hungry functions

- Optimize resource efficiency

- Provide test coverage references

- Enhance testing & validation

Converge static and dynamic analysis for early-stage optimizations

Unlock R&D Productivity & Reduce Time to Resolution

LOCI dramatically reduces Mean Time to Resolution (MTTR) and enhances key performance indicators (KPIs) by shifting performance analysis left in the development cycle.

Key Benefits:

- Issue detection immediately after build

- Fewer costly late-stage performance issues

- Faster performance debugging & fixes

- Power efficiency gains

- Rapid root cause analysis

- Optimized testing

Seamless CI/CD Integration for Early Optimization

LOCI integrates directly into CI/CD pipelines, providing feedback immediately after each build and eliminating the need for extensive post-deployment profiling. This enables continuous monitoring, early anomaly detection, and fewer rollbacks and hotfixes, resulting in a more stable and efficient software release cycle.
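To illustrate the kind of post-build check described above, here is a minimal, hypothetical sketch in Python of a regression gate. It compares per-function metrics for a baseline build with those of a candidate build. It is not LOCI's API. The function and class names, the 10% threshold, and the metric values are illustrative assumptions. In a real pipeline, the per-function metrics would come from whatever analysis tool the pipeline uses.

```python
"""Hypothetical post-build regression gate (illustrative only, not LOCI's API).

Compares a per-function metric (for example, estimated cycles per call) between
a baseline build and a candidate build and flags functions whose metric grew
by more than a relative threshold.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Regression:
    function: str
    baseline: float
    candidate: float

    @property
    def change(self) -> float:
        # Relative change versus the baseline value (0.25 means +25%).
        return (self.candidate - self.baseline) / self.baseline


def find_regressions(baseline: dict[str, float],
                     candidate: dict[str, float],
                     threshold: float = 0.10) -> list[Regression]:
    """Return functions whose metric increased by more than `threshold`."""
    regressions = []
    for name, new_value in candidate.items():
        old_value = baseline.get(name)
        # Skip functions that are new in this build or lack a usable baseline.
        if old_value is None or old_value <= 0:
            continue
        if (new_value - old_value) / old_value > threshold:
            regressions.append(Regression(name, old_value, new_value))
    # Report the largest relative regressions first.
    return sorted(regressions, key=lambda r: r.change, reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only; the function names are hypothetical.
    baseline = {"parse_frame": 1200.0, "crc32": 300.0, "dispatch": 800.0}
    candidate = {"parse_frame": 1500.0, "crc32": 305.0,
                 "dispatch": 790.0, "new_fn": 50.0}
    for r in find_regressions(baseline, candidate):
        print(f"{r.function}: {r.baseline:.0f} -> {r.candidate:.0f} "
              f"({r.change:+.1%})")
```

A CI step could call `find_regressions` after each build and fail the job when the returned list is non-empty. Sorting by relative change puts the most significant degradations at the top of the report.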

LOCI for Reliability, Availability, and Serviceability (RAS) in AI Inference

LOCI enhances AI inference with a robust Reliability, Availability, and Serviceability (RAS) solution for in-field device analytics.

The solution uses an efficient, cost-effective vertical model running on a local Deep Neural Network (DNN) and provides an API for developers. LOCI’s RAS software stack predicts performance degradation, issues alerts on downtime probability, and provides prescriptive updates, ensuring seamless communication and optimal performance across nodes.

This comprehensive approach empowers organizations to proactively manage their AI infrastructure, minimizing disruptions and maximizing efficiency.

Key Benefits:

Silent Data Corruption (SDC) Detection

Monitoring of PVT (process, voltage, temperature) variation, ECC errors, and GPU, CPU, and memory corruption, with root cause analysis.

In-Field Monitoring

Real-time prediction of degradation in power, temperature, performance, CPU, and quality.

Temperature Behavior Analysis

Anomaly detection in specific dies and cores, pinpointing affected code sections.

Voltage Optimization

Voltage adjustment recommendations based on rising ECC error rates and system performance.

System Insights and Performance Monitoring

Prediction of workload trends, detection of bottlenecks, optimization of cold startups, tracking of event deviations, and root cause analysis for improved system reliability and performance.

Specific Data Insights

Insights such as missing data in databases, module-to-module comparisons, and code-specific problems identified down to the line and core level.

Our Technology

LOCI is built on Aurora Labs’ proprietary vertical LLM, the Large Code Language Model (LCLM), which is specifically designed for compiled binaries.

Unlike general-purpose Large Language Models (LLMs), the LCLM delivers efficient, accurate binary analysis and detects software behavior changes on target hardware, offering deep contextual insights into system-wide impacts without the need for source code.

The LCLM analyzes software artifacts and transforms complex data into meaningful insights. Compared with general-purpose LLMs, the LCLM’s vocabulary is highly efficient (1,000x smaller), thanks to reinvented tokenizers and an efficient training pipeline that uses only 6 GPUs.

This LCLM drives LOCI – our Line-Of-Code Intelligence technology platform.

LOCI goes beyond static analysis of software and understands the context of software behavior within a given functional flow. These insights enable LOCI to detect deviations from expected and predicted software behavior before production deployment.

About Aurora Labs

Aurora Labs is a domain expert in ML, NLP, and model tuning and has pioneered data-driven innovation since 2017, developing a proprietary vertical large language model (LLM), the Large Code Language Model (LCLM). The LCLM specializes in comprehensive system workload analysis, focusing on power and performance for observability and reliability, and accelerates the development of embedded systems, AI, and data center infrastructure.

Founded in 2016, Aurora Labs has raised $97m and has been granted 100+ patents. Aurora Labs is headquartered in Tel Aviv, Israel, with offices in the US, Germany, North Macedonia, and Japan.

For more information: www.auroralabs.com

Why Aurora Labs

- ML & LLM tuning expertise since 2017

- Proven track record of using ML/LLM to optimize embedded & HPC systems

- Innovation leader with over 100 granted patents in AI, Embedded Systems & Observability

- Commitment to industry standards, with ISO 27001, ISO/SAE 21434, ASPICE L2, ASIL B, and ISO 26262 certifications

- Trusted by global industry leaders: LG, VW, AWS, STMicroelectronics, Infineon, NTT Data, and Toyota (TTC)

- Global support network encompassing engineering, customer success, and operations

- Versatile solutions for startups, SMBs, and large enterprises, with flexible hosting options (Cloud, On-Premises, SaaS)

- Unparalleled expertise: 300 development-years of experience in code-level machine learning models for embedded C/C++ software